draw decision tree for problems in machine tools

Decision Tree is the most powerful and popular tool for classification and prediction. A Decision tree is a flowchart-like tree structure, where each internal node denotes a test on an attribute, each branch represents an outcome of the test, and each leaf node (terminal node) holds a class label.

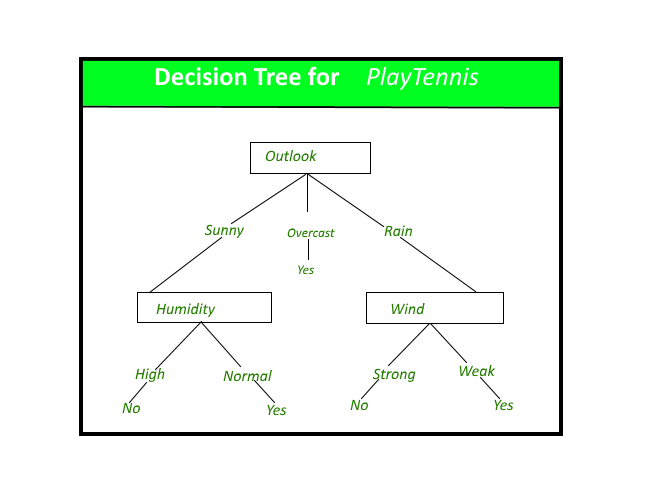

A decision tree for the concept PlayTennis.

Construction of Decision Tree: A tree can be "learned" by splitting the source set into subsets based on an attribute value test. This process is repeated on each derived subset in a recursive manner called recursive partitioning. The recursion is completed when the subset at a node all has the same value of the target variable, or when splitting no longer adds value to the predictions. The construction of a decision tree classifier does not require any domain knowledge or parameter setting, and therefore is appropriate for exploratory knowledge discovery. Decision trees can handle high-dimensional data. In general decision tree classifier has good accuracy. Decision tree induction is a typical inductive approach to learn knowledge on classification.

Decision Tree Representation: Decision trees classify instances by sorting them down the tree from the root to some leaf node, which provides the classification of the instance. An instance is classified by starting at the root node of the tree, testing the attribute specified by this node, then moving down the tree branch corresponding to the value of the attribute as shown in the above figure. This process is then repeated for the subtree rooted at the new node.

The decision tree in above figure classifies a particular morning according to whether it is suitable for playing tennis and returns the classification associated with the particular leaf.(in this case Yes or No).

For example, the instance

(Outlook = Sunny, Temperature = Hot, Humidity = High, Wind = Strong )

would be sorted down the leftmost branch of this decision tree and would therefore be classified as a negative instance.

In other words, we can say that the decision tree represents a disjunction of conjunctions of constraints on the attribute values of instances.

(Outlook = Sunny ^ Humidity = Normal) v (Outlook = Overcast) v (Outlook = Rain ^ Wind = Weak)

Gini Index:

Gini Index is a score that evaluates how accurate a split is among the classified groups. Gini index evaluates a score in the range between 0 and 1, where 0 is when all observations belong to one class, and 1 is a random distribution of the elements within classes. In this case, we want to have a Gini index score as low as possible. Gini Index is the evaluation metrics we shall use to evaluate our Decision Tree Model.

Implementation:

Python3

from sklearn.datasets import make_classification

from sklearn import tree

from sklearn.model_selection import train_test_split

X, t = make_classification( 100 , 5 , n_classes = 2 , shuffle = True , random_state = 10 )

X_train, X_test, t_train, t_test = train_test_split(

X, t, test_size = 0.3 , shuffle = True , random_state = 1 )

model = tree.DecisionTreeClassifier()

model = model.fit(X_train, t_train)

predicted_value = model.predict(X_test)

print (predicted_value)

tree.plot_tree(model)

zeroes = 0

ones = 0

for i in range ( 0 , len (t_train)):

if t_train[i] = = 0 :

zeroes + = 1

else :

ones + = 1

print (zeroes)

print (ones)

val = 1 - ((zeroes / 70 ) * (zeroes / 70 ) + (ones / 70 ) * (ones / 70 ))

print ( "Gini :" , val)

match = 0

UnMatch = 0

for i in range ( 30 ):

if predicted_value[i] = = t_test[i]:

match + = 1

else :

UnMatch + = 1

accuracy = match / 30

print ( "Accuracy is: " , accuracy)

Strengths and Weaknesses of the Decision Tree approach

The strengths of decision tree methods are:

- Decision trees are able to generate understandable rules.

- Decision trees perform classification without requiring much computation.

- Decision trees are able to handle both continuous and categorical variables.

- Decision trees provide a clear indication of which fields are most important for prediction or classification.

The weaknesses of decision tree methods :

- Decision trees are less appropriate for estimation tasks where the goal is to predict the value of a continuous attribute.

- Decision trees are prone to errors in classification problems with many classes and a relatively small number of training examples.

- Decision tree can be computationally expensive to train. The process of growing a decision tree is computationally expensive. At each node, each candidate splitting field must be sorted before its best split can be found. In some algorithms, combinations of fields are used and a search must be made for optimal combining weights. Pruning algorithms can also be expensive since many candidate sub-trees must be formed and compared.

In the next post, we will be discussing the ID3 algorithm for the construction of the Decision tree given by J. R. Quinlan.

This article is contributed by Saloni Gupta. If you like GeeksforGeeks and would like to contribute, you can also write an article using write.geeksforgeeks.org or mail your article to review-team@geeksforgeeks.org. See your article appearing on the GeeksforGeeks main page and help other Geeks.

Source: https://www.geeksforgeeks.org/decision-tree/

0 Response to "draw decision tree for problems in machine tools"

Post a Comment